SQL vs NoSQL: Key Differences

The Modern Database Dilemma: A Definitive Guide to SQL, NoSQL, and the Future of Data

Introduction: Moving Beyond the "Versus"

The discourse surrounding database technology has long been framed as a simple, two-sided battle: SQL versus NoSQL. This report will dismantle that simplistic narrative, arguing instead that the modern data landscape demands a more nuanced understanding. The choice is not about a single victor but about selecting the optimal tool for a specific job, a strategy that often leads to a hybrid approach known as "polyglot persistence". We will explore the philosophies, architectures, and practical trade-offs of each paradigm, providing a definitive guide for architects, developers, and technology leaders navigating this complex ecosystem.

The journey begins with Edgar F. Codd's groundbreaking 1970 paper, "A Relational Model of Data for Large Shared Data Banks," which established the mathematical and logical foundation for the relational databases that would dominate the industry for three decades. These systems, queried by Structured Query Language (SQL), brought order, consistency, and reliability to the world of data. However, the rise of the internet and the subsequent explosion of "Big Data" in the early 2000s created a new set of challenges. The sheer volume, velocity, and variety of data generated by web-scale applications—from social media posts and user-generated content to IoT sensor logs—strained the rigid, scale-up model of traditional relational databases. This pressure gave birth to the NoSQL movement, a diverse family of databases designed for a world that the relational model was never built to handle.


This report is structured in four parts. Part I will detail the fundamental philosophies that underpin SQL and NoSQL, exploring the "why" behind their designs. Part II will conduct a deep technical comparison across five key dimensions: data models, scalability, query languages, consistency models, and the developer experience. Part III will introduce NewSQL, an emerging class of databases that serves as an architectural bridge, attempting to reconcile the differences between these two worlds. Finally, Part IV will ground this entire discussion in real-world applications, detailed case studies, and practical strategy, culminating in a framework for making informed, effective database decisions for any project.

Part I: The Two Philosophies of Data

To truly understand the difference between SQL and NoSQL, one must look beyond the surface-level features and delve into the core philosophies that guide their design. These are not merely different technologies; they are different ways of thinking about data, integrity, and scale.

The World of SQL: A Foundation of Structure and Integrity

The SQL philosophy is rooted in mathematics, logic, and a fundamental belief in the importance of data integrity guaranteed by the database itself. It is a world of order, predictability, and consistency.

The Relational Model Explained

The foundation of SQL databases is the relational model, an elegant and intuitive method for structuring information. This model organizes data into tables, which are formally known as "relations." Each table is composed of columns (attributes) that define the data to be stored and rows (records or tuples) that represent individual entities. The true power of this model lies in its ability to establish logical connections (relationships) between these tables, which is achieved through keys. Each row in a table is identified by a primary key, a value that is unique to that record. When another table stores that value in one of its columns to refer back to the record, that column is called a foreign key, and it creates a logical link between the two tables. This system, often compared to a collection of interconnected spreadsheets, allows related data to be queried across the database without physically reorganizing the tables themselves.
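The following is a minimal sketch of these ideas using Python's standard-library `sqlite3` module and an in-memory database. The `users` and `orders` tables and their rows are illustrative assumptions, not part of any real system. Note that SQLite only enforces foreign keys once `PRAGMA foreign_keys = ON` has been issued on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Each table is a relation: named, typed columns, one row per entity.
conn.execute("""
    CREATE TABLE users (
        user_id INTEGER PRIMARY KEY,  -- primary key: unique identifier for each row
        name    TEXT NOT NULL
    )""")
conn.execute("""
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        user_id  INTEGER NOT NULL REFERENCES users(user_id),  -- foreign key to users
        amount   REAL NOT NULL
    )""")

with conn:  # commit both inserts together
    conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                     [(101, 1, 25.0), (102, 1, 40.0), (103, 2, 15.5)])

# The JOIN follows the key relationship at query time; no table is physically reorganized.
alice_orders = conn.execute("""
    SELECT u.name, o.order_id, o.amount
    FROM users u JOIN orders o ON u.user_id = o.user_id
    WHERE u.name = 'Alice'
    ORDER BY o.order_id""").fetchall()
print(alice_orders)  # [('Alice', 101, 25.0), ('Alice', 102, 40.0)]

# An order that references a non-existent user violates the foreign key.
try:
    with conn:
        conn.execute("INSERT INTO orders VALUES (104, 99, 10.0)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
print(orphan_rejected)  # True
```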

The Power of a Predefined Schema

A defining characteristic of SQL databases is their enforcement of a predefined schema. This "schema-on-write" policy dictates that the structure of the data, including tables, column names, data types (e.g., INTEGER, VARCHAR, DATETIME), and constraints, must be explicitly defined before any data can be written to the database. While this may seem rigid, it is a deliberate design choice that serves as the cornerstone of data integrity. By enforcing a strict structure, the database guarantees that every stored row conforms to the declared types and constraints, which keeps the data consistent, predictable, and reliable. Every application and every user interacting with the database can be certain of the shape and type of the data they will encounter, which simplifies application development and ensures a single, authoritative source of truth.
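To illustrate schema-on-write, the sketch below declares a table up front and lets the database reject rows that break its rules. It again assumes Python's `sqlite3`; because SQLite's column typing is looser than that of most RDBMSs, the example leans on NOT NULL, CHECK, and PRIMARY KEY constraints rather than on type enforcement. The `products` table and its rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Schema-on-write: the structure and its rules are declared before any data arrives.
conn.execute("""
    CREATE TABLE products (
        sku   TEXT PRIMARY KEY,
        name  TEXT NOT NULL,
        price REAL NOT NULL CHECK (price >= 0)
    )""")

def try_insert(row):
    """Insert one row; return None if accepted, else the database's error message."""
    try:
        with conn:  # commit on success, roll back on error
            conn.execute("INSERT INTO products VALUES (?, ?, ?)", row)
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)

results = {
    "valid": try_insert(("A-1", "Widget", 9.99)),
    "missing name": try_insert(("A-2", None, 5.0)),
    "negative price": try_insert(("A-3", "Gadget", -1.0)),
    "duplicate sku": try_insert(("A-1", "Other", 1.0)),
}
for case, error in results.items():
    print(f"{case:15} {'accepted' if error is None else 'rejected: ' + error}")

stored = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]
print(stored)  # 1: only the valid row was written
```

The exact wording of the error messages differs between SQLite versions, so the code only reports them rather than matching on them.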

Deep Dive: The ACID Guarantees

The gold standard for transactional reliability in the database world is ACID compliance. ACID is an acronym for a set of four properties that ensure database transactions are processed reliably and maintain the integrity of the database, even in the face of errors or system failures.

* Atomicity: This property guarantees that a transaction is an "all-or-nothing" proposition. A transaction may consist of multiple individual operations, but Atomicity ensures that either all of these operations complete successfully, or none of them do. If any part of the transaction fails, the entire transaction is rolled back, and the database is left in its original state, as if the transaction never happened.

* Consistency: This ensures that every transaction brings the database from one valid state to another. The database has a set of predefined rules and constraints (e.g., a value in a column must be unique, a foreign key must point to an existing primary key). The Consistency property guarantees that any transaction that would violate these rules will be rejected, thus preventing data corruption.

* Isolation: This property addresses the challenge of multiple transactions running concurrently. Isolation ensures that the execution of one transaction is shielded from the effects of others. At the strictest level, serializable isolation, each transaction behaves as though it were the only one running on the system, and the final state of the database is the same as if the transactions had been executed one after another, serially. Isolation prevents anomalies such as "dirty reads" (reading uncommitted data). Many databases default to weaker levels, such as Read Committed in PostgreSQL, which trade some of these guarantees for higher concurrency.

* Durability: Durability guarantees that once a transaction has been successfully committed, it will remain committed. The changes made are permanent and will survive any subsequent system failure, such as a power outage or a server crash. This is typically achieved by writing transaction logs to non-volatile storage.
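Atomicity is the easiest of the four properties to demonstrate in a few lines. In this sketch (Python `sqlite3`, with a hypothetical `accounts` table), a transfer credits one account and then debits another. If the debit would violate the no-overdraft CHECK constraint, the whole transaction rolls back, including the credit that had already succeeded. The connection's context manager is what commits on success and rolls back when an exception escapes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE accounts (
        name    TEXT PRIMARY KEY,
        balance INTEGER NOT NULL CHECK (balance >= 0)  -- no overdrafts allowed
    )""")
with conn:
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])

def transfer(src, dst, amount):
    """Move funds as one transaction: both updates apply, or neither does."""
    try:
        with conn:  # commits on normal exit, rolls back if an exception is raised
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?",
                         (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?",
                         (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False

def balances():
    return dict(conn.execute("SELECT name, balance FROM accounts"))

ok = transfer("alice", "bob", 30)       # succeeds
failed = transfer("bob", "alice", 500)  # credit runs first, then the debit violates CHECK
print(ok, failed, balances())  # True False {'alice': 70, 'bob': 80}
```

Even though Alice's balance was briefly raised to 570 inside the failed transaction, the rollback leaves no trace of it.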

The Universe of NoSQL: A Response to Scale and Flexibility

The NoSQL philosophy was born out of necessity. It is not an outright rejection of the SQL model but a recognition that the rigid, structured world of relational databases was ill-equipped to handle the chaotic, massive, and fast-moving data of the modern internet. It is a world of flexibility, availability, and massive scale.

The "Not Only SQL" Ethos

The term NoSQL, often interpreted as "Not Only SQL," gained its current meaning in the late 2000s as a label for a new generation of non-relational databases. These systems were engineered to address the limitations of traditional RDBMSs when faced with the challenges of web-scale applications. The primary drivers were the need to manage massive volumes of unstructured or semi-structured data (such as social media posts, user profiles, IoT sensor data, images, and application logs) and the requirement for horizontal scalability to handle immense traffic loads. The ethos was not to replace SQL entirely, but to provide an alternative for use cases where the relational model was a bottleneck.

Embracing Chaos: The Freedom of a Dynamic Schema

In direct contrast to SQL's schema-on-write policy, NoSQL databases champion a flexible, dynamic schema, often described as "schema-on-read". This means that data can be stored without a predefined structure. In a document database, for example, two documents within the same collection can have entirely different fields and data types. This flexibility is a massive advantage for agile development teams. It allows for rapid iteration and the addition of new application features without the need to perform complex and time-consuming database migrations. Developers can simply start storing new data structures as their application evolves.
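A rough illustration of a dynamic schema, using plain Python dictionaries as stand-ins for documents in one collection. No real document database or driver is involved, and the `find` helper is a hypothetical simplification of a collection query.

```python
# A list of dicts stands in for one document collection (no database involved).
products = [
    {"_id": 1, "name": "T-shirt", "price": 15.0, "sizes": ["S", "M", "L"]},
    {"_id": 2, "name": "E-book", "price": 9.0, "file_format": "EPUB", "pages": 320},
    # Added later for a new feature: a nested field, with no migration of older documents.
    {"_id": 3, "name": "Headphones", "price": 80.0,
     "specs": {"wireless": True, "battery_hours": 30}},
]

def find(collection, **criteria):
    """Return documents whose fields equal every criterion; absent fields never match."""
    return [doc for doc in collection
            if all(key in doc and doc[key] == value for key, value in criteria.items())]

# Readers must cope with fields that only some documents have.
for doc in products:
    print(doc["name"], doc.get("sizes", "no sizes"))

print([doc["_id"] for doc in find(products, file_format="EPUB")])  # [2]
```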

Deep Dive: The CAP Theorem and the BASE Model

Where SQL databases are defined by ACID, distributed NoSQL systems are shaped by the CAP Theorem, which applies to any data store replicated across a network, relational or not. This theorem, formulated by computer scientist Eric Brewer, posits that a distributed data store cannot simultaneously provide all three of the following guarantees:

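* Consistency: Every read operation receives the most recent write or an error. In a consistent system, all nodes see the same data at the same time. This is a different property from the "C" in ACID, which concerns respecting the database's rules and constraints rather than agreement between replicas.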

* Availability: Every request receives a (non-error) response, without the guarantee that it contains the most recent write. The system is always available to serve requests.

* Partition Tolerance: The system continues to operate despite an arbitrary number of messages being dropped (or delayed) by the network between nodes.

In any real-world distributed system that communicates over a network, network partitions are a fact of life. Therefore, a distributed database must be partition tolerant. This forces a direct trade-off between strong consistency (C) and high availability (A). A system can choose to be strongly consistent by, for example, refusing to respond to a request if it cannot confirm it has the latest data, thereby sacrificing availability. Or, it can choose to be highly available by responding with the data it has, even if it might be slightly stale, thereby sacrificing strong consistency.
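To make the trade-off concrete, here is a toy Python model of a replica that has lost contact with the rest of its cluster. It does not reflect any real database's behavior: the `Replica` class and its `mode` flag are hypothetical, and real systems make this choice through configuration and protocol design rather than a single switch.

```python
class Replica:
    """A toy replica whose CP/AP choice decides how it answers during a partition."""

    def __init__(self, mode):
        self.mode = mode          # "CP" (refuse when unsure) or "AP" (answer anyway)
        self.data = {}
        self.partitioned = False  # True once the replica loses contact with its peers

    def apply_update(self, key, value):
        if not self.partitioned:  # updates from the rest of the cluster cannot get through
            self.data[key] = value

    def read(self, key):
        if self.partitioned and self.mode == "CP":
            # Cannot confirm it holds the latest write, so it sacrifices availability.
            raise TimeoutError("partitioned: cannot guarantee the latest value")
        # AP mode (or a healthy network): answer from local state, possibly stale.
        return self.data.get(key)

cp, ap = Replica("CP"), Replica("AP")
for replica in (cp, ap):
    replica.apply_update("stock", 10)
    replica.partitioned = True
    replica.apply_update("stock", 7)  # this newer write never arrives

print(ap.read("stock"))  # 10: available, but stale
try:
    cp.read("stock")
except TimeoutError as exc:
    print("CP replica:", exc)  # consistent, but unavailable
```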

This trade-off leads many NoSQL systems to adopt the BASE philosophy as an alternative to ACID:

* Basically Available: The system guarantees availability, in the sense of the CAP theorem.

* Soft State: The state of the system may change over time, even without input, due to the eventual consistency model.

* Eventually Consistent: If no new updates are made to a given data item, all accesses to that item will eventually return the last updated value.

This model prioritizes availability and performance over the immediate, strict consistency of ACID, which is perfectly acceptable for many modern use cases. For example, if a user's "like" on a social media post takes a few seconds to propagate to all users worldwide, the system remains functional and the user experience is not significantly impacted. In contrast, if an account balance were only "eventually consistent," two replicas could briefly disagree and each approve a withdrawal, together paying out more money than the account holds.
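One way a BASE system can guarantee that a like counter converges is a conflict-free replicated data type (CRDT). The sketch below uses a grow-only counter (G-Counter), a technique chosen here for illustration: the report does not say that any particular database uses it, and many systems rely on other mechanisms such as last-writer-wins. The replica names and counts are hypothetical.

```python
class GCounter:
    """Grow-only counter CRDT: each replica increments only its own slot."""

    def __init__(self, replica_id):
        self.replica_id = replica_id
        self.counts = {}

    def increment(self, n=1):
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + n

    def merge(self, other):
        # Element-wise max is commutative, associative, and idempotent,
        # so replicas converge regardless of sync order or repeated syncs.
        for rid, count in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), count)

    def value(self):
        return sum(self.counts.values())

us, eu = GCounter("us"), GCounter("eu")
us.increment()    # one like recorded in the US region
eu.increment(2)   # two likes recorded in the EU region
print(us.value(), eu.value())  # 1 2: replicas temporarily disagree (soft state)

# Background anti-entropy: exchange state in both directions.
us.merge(eu)
eu.merge(us)
print(us.value(), eu.value())  # 3 3: converged once updates stop
```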

The core philosophical difference between these two paradigms extends beyond their structure; it fundamentally alters where and when data integrity is enforced. SQL databases act as the central guardian of data correctness. Through their rigid, predefined schemas and strict ACID properties, they enforce integrity at the point of writing. Any application attempting to write data that violates the established rules will be rejected by the database itself. This creates a single, reliable source of truth that all consuming applications can trust implicitly.

NoSQL databases, with their flexible schemas, take a different approach. They often delegate the responsibility of data validation to the application layer, enforcing structure at the point of reading. The database will accept varied data structures without complaint, but the application code is now burdened with the task of interpreting, validating, and handling potentially inconsistent data. In practice, this can lead to situations where one service writes a field as a string while another expects it to be an integer, causing the reading application to fail. This represents a crucial architectural trade-off. The SQL approach centralizes data governance, which can slow down initial development but provides robust, long-term consistency. The NoSQL approach decentralizes governance, enabling faster iteration and greater flexibility but placing a higher premium on developer discipline, robust testing, and clear communication between teams to prevent "schema drift" and maintain data quality over time. The choice, therefore, has direct and lasting implications for team structure, development velocity, and the total cost of ownership.
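The string-versus-integer problem is easy to reproduce. In this sketch, hypothetical documents written by different services disagree on the type of an `age` field, so the reading code has to validate and coerce at the point of use. The field name and the coercion policy are assumptions chosen for illustration.

```python
# Hypothetical documents: different services wrote the same logical field differently.
raw_documents = [
    {"user": "alice", "age": 34},        # service A writes an integer
    {"user": "bob", "age": "41"},        # service B writes a string
    {"user": "carol"},                   # an older document has no age at all
    {"user": "dave", "age": "unknown"},  # bad data the database accepted without complaint
]

def read_age(doc):
    """Schema-on-read: coerce or reject the field at the point of use."""
    value = doc.get("age")
    if value is None:
        return None                      # missing field: the caller decides on a default
    if isinstance(value, int):
        return value
    if isinstance(value, str) and value.isdigit():
        return int(value)                # tolerate the drifted string form
    raise ValueError(f"invalid age for {doc['user']!r}: {value!r}")

ages, errors = {}, []
for doc in raw_documents:
    try:
        ages[doc["user"]] = read_age(doc)
    except ValueError as exc:
        errors.append(str(exc))

print(ages)    # {'alice': 34, 'bob': 41, 'carol': None}
print(errors)  # ["invalid age for 'dave': 'unknown'"]
```

Every service that reads this collection needs equivalent logic, which is exactly the governance burden the paragraph describes.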

Part II: A Five-Point Technical Showdown

Moving from high-level philosophy to practical implementation, this section provides a granular, feature-by-feature comparison of SQL and NoSQL databases across five critical technical dimensions. Understanding these differences is essential for making sound architectural decisions.

1. Data Models: The Shape of Your Information

The way a database organizes data fundamentally dictates its strengths, weaknesses, and ideal use cases.

* SQL (Relational): SQL databases adhere to a single, powerful data model: the relational model. Data is strictly organized into tables whose columns are defined in advance, with each row holding one record in that structure. This tabular structure is optimized for storing structured data and for representing complex relationships, including many-to-many relationships (typically through a junction table), through the use of primary and foreign keys. Its uniformity and logical rigor make it a general-purpose powerhouse for a wide array of applications built on well-understood data.

* NoSQL (Non-Relational): NoSQL is not a single model but rather a diverse family of data models, each highly specialized for a particular type of data and access pattern. The four primary types are:

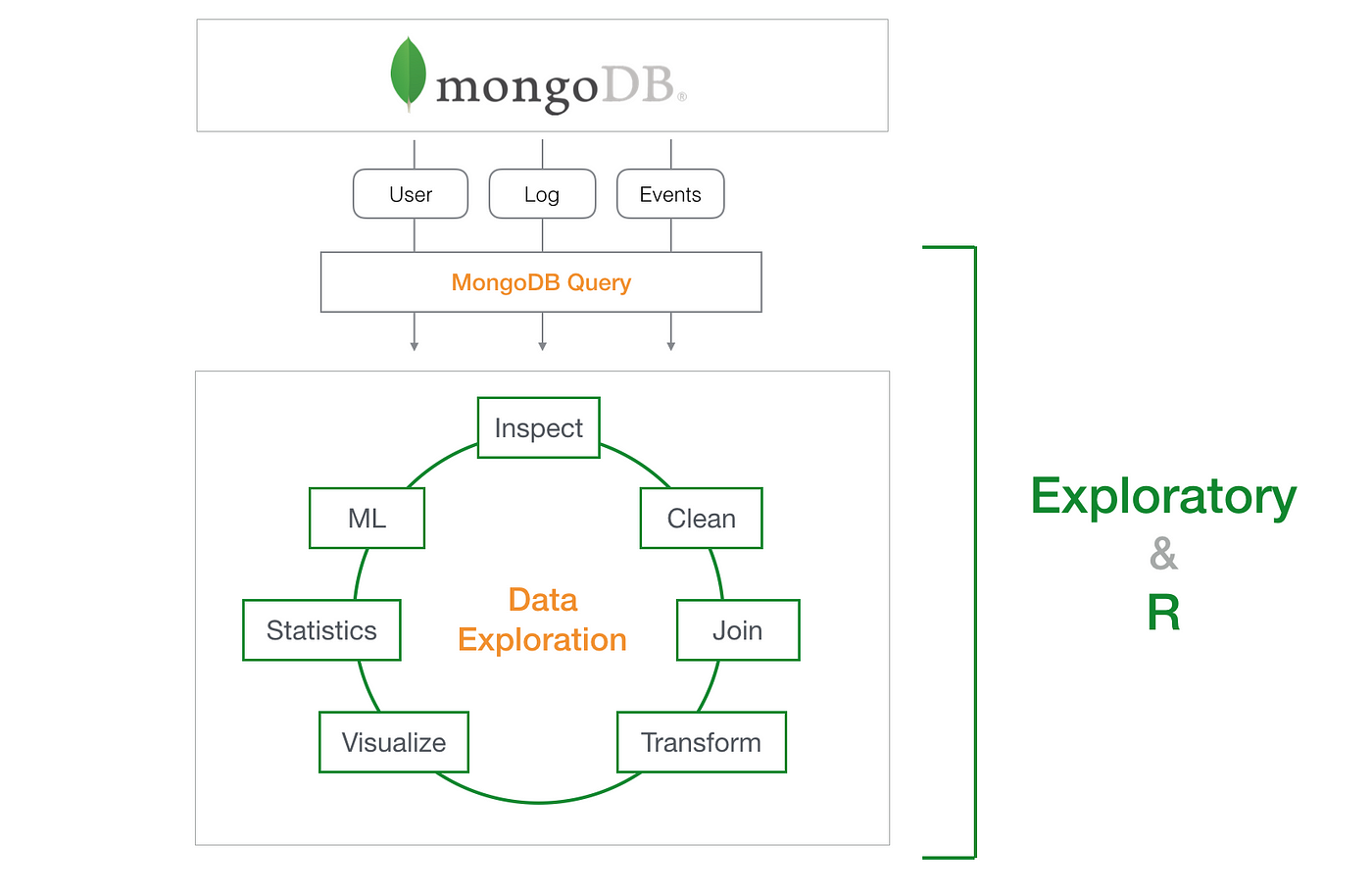

* Document Databases: These databases, exemplified by MongoDB and CouchDB, store data in flexible, semi-structured documents, most commonly using formats like JSON (JavaScript Object Notation) or BSON (Binary JSON). This model is highly intuitive for developers, as the document structure can closely mirror the objects used in application code. This makes them an excellent choice for content management systems, product catalogs, and user profiles, where each entity may have a unique and evolving set of attributes.

* Key-Value Stores: Representing the simplest NoSQL model, key-value stores like Redis and Amazon DynamoDB function like a massive dictionary or hash map. Data is stored as a collection of key-value pairs, where each unique key maps to a specific value. This architecture is optimized for extremely fast read and write operations for simple lookups by key, making it perfect for applications like high-performance caching, session management, and real-time gaming leaderboards.

* Wide-Column Stores: Databases like Apache Cassandra and Google Bigtable organize data into tables, rows, and columns, but with a crucial difference from the relational model: the names and format of the columns can vary from row to row within the same table. They are designed to efficiently handle massive datasets with very high write throughput. This makes them exceptionally well-suited for time-series data, IoT applications, and large-scale analytics workloads.

* Graph Databases: Systems like Neo4j and Amazon Neptune are purpose-built to store and navigate relationships. Data is modeled as a graph, consisting of nodes (which represent entities) and edges (which represent the relationships between those entities). In this model, relationships are not a computational afterthought but are stored as first-class citizens. This makes traversing complex, interconnected data incredibly efficient, providing a powerful solution for social networks, fraud detection, identity and access management, and real-time recommendation engines.
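To make the four models concrete, the sketch below expresses the same small user dataset in each shape using plain Python structures. These are conceptual stand-ins only: real products add indexing, persistence, and distribution, and the key names, row keys, and `FOLLOWS` relationship type are hypothetical.

```python
# Document: one self-contained, nested record per entity.
user_document = {
    "_id": "u1",
    "name": "Alice",
    "address": {"city": "Lisbon"},
    "interests": ["climbing", "jazz"],
}

# Key-value: opaque values fetched only by exact key (the key naming scheme is an assumption).
kv_store = {
    "user:u1:name": "Alice",
    "session:abc123": "u1",
}

# Wide-column: row key -> sparse set of columns; rows need not share column names.
wide_column = {
    "u1": {"name": "Alice", "city": "Lisbon"},
    "u2": {"name": "Bob", "last_login": "2024-05-01"},
}

# Graph: nodes plus typed edges stored as data in their own right (here, adjacency lists).
nodes = {"u1": {"label": "User", "name": "Alice"}, "u2": {"label": "User", "name": "Bob"}}
edges = {"u1": [("FOLLOWS", "u2")], "u2": []}

print(user_document["address"]["city"])                       # Lisbon
print(kv_store["session:abc123"])                             # u1
print(sorted(wide_column["u2"]))                              # ['last_login', 'name']
print([dst for rel, dst in edges["u1"] if rel == "FOLLOWS"])  # ['u2']
```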

The choice of a NoSQL data model is a high-stakes architectural decision with profound and lasting consequences. Unlike the SQL world, where different RDBMS vendors offer largely interchangeable, general-purpose relational models, the various NoSQL models are highly specialized and not interchangeable. Each model is optimized for a specific data shape and a corresponding set of query patterns. Attempting to force a data structure into an ill-fitting NoSQL model can lead to disastrous outcomes, negating the very performance and flexibility benefits that drive NoSQL adoption in the first place. For instance, modeling a highly interconnected social network in a document database would be profoundly inefficient. It would necessitate either massive data duplication within documents or an unmanageable number of application-level queries to simulate relationships, effectively re-implementing slow JOINs in the application code. Conversely, a graph database is explicitly designed for this task, making relationship traversal trivial. This reality places a significant burden on architects to deeply understand their application's data and, critically, its primary access patterns before committing to a specific NoSQL model. The initial choice of NoSQL type is far more critical and difficult to reverse than the choice of a specific SQL vendor.
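The contrast is easiest to see with a multi-hop query. In this sketch, hypothetical friendship data is held as adjacency lists, the shape a graph database stores natively, so finding friends-of-friends is a breadth-first search that follows direct references at each hop. In a document store without such links, each hop would typically become another round of lookups issued by the application.

```python
from collections import deque

# Adjacency lists: each person points directly at their friends (hypothetical data).
friends = {
    "alice": ["bob", "carol"],
    "bob": ["alice", "dave"],
    "carol": ["alice", "erin"],
    "dave": ["bob", "frank"],
    "erin": ["carol"],
    "frank": ["dave"],
}

def within_hops(graph, start, max_hops):
    """Breadth-first search: everyone reachable in 1..max_hops hops, with their distance."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        person = queue.popleft()
        if seen[person] == max_hops:
            continue                     # do not expand past the hop limit
        for friend in graph.get(person, []):
            if friend not in seen:
                seen[friend] = seen[person] + 1
                queue.append(friend)
    del seen[start]
    return seen

print(within_hops(friends, "alice", 2))
# {'bob': 1, 'carol': 1, 'dave': 2, 'erin': 2}
```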

2. Scalability: How to Grow Without Breaking

Scalability refers to a system's ability to handle a growing amount of work. SQL and NoSQL databases were born in different eras and, as a result, approach this challenge from fundamentally different directions.

* Vertical Scaling (Scale-Up): This is the traditional method for scaling a SQL database. To handle increased load, you enhance the resources of the single server on which the database runs. This means adding more powerful CPUs, increasing the amount of RAM, or upgrading to faster SSD storage. This approach is straightforward to implement and manage. However, it has two major drawbacks: it can become prohibitively expensive at the high end, and there is always an ultimate physical limit to how powerful a single machine can be.

* Horizontal Scaling (Scale-Out): This is the native scaling model for most NoSQL databases. Instead of making one server more powerful, you distribute the load across a cluster of multiple, often less-expensive, commodity servers or nodes. As traffic and data volume grow, you add more nodes to the cluster. This approach can be highly cost-effective, aligns well with modern cloud infrastructure, and lets capacity grow far beyond the limits of any single machine. It is typically achieved through sharding, where data is partitioned across the different nodes, a feature that is built in and automated in many NoSQL systems.

It is important to recognize that this dichotomy, while a useful rule of thumb, is not absolute. SQL databases can also scale horizontally. Read replicas, which most major RDBMSs support natively, spread read traffic across servers, and data can be sharded (partitioned) across multiple database servers. Sharding a traditional RDBMS, however, is typically complex to implement, is often handled outside the database engine itself, and introduces significant operational overhead and application-level complexity. NoSQL databases, in contrast, were designed from the ground up for a distributed, scale-out world.
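Many NoSQL systems decide which node owns a key by hashing it. A common technique for this is consistent hashing, sketched below in Python as an illustration; the report does not name a specific partitioning scheme, and the node names, virtual-node count, and MD5-based hash are assumptions. Its key property is that adding a node moves only roughly the new node's share of keys, whereas a naive `hash(key) % node_count` would reassign most keys.

```python
import bisect
import hashlib

def stable_hash(value):
    # Stable 64-bit hash (Python's built-in hash() is randomized per process for str).
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")

class HashRing:
    """Consistent hashing: a key belongs to the first node clockwise on the ring."""

    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes  # virtual nodes per server smooth out the key distribution
        self._ring = []       # sorted list of (hash, node)
        for node in nodes:
            self.add(node)

    def add(self, node):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (stable_hash(f"{node}#{i}"), node))

    def node_for(self, key):
        idx = bisect.bisect_right(self._ring, (stable_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]  # wrap around past the last point

keys = [f"user:{i}" for i in range(10_000)]
ring = HashRing(["node-a", "node-b", "node-c"])
before = {k: ring.node_for(k) for k in keys}

ring.add("node-d")  # scale out by one node
after = {k: ring.node_for(k) for k in keys}

moved = sum(before[k] != after[k] for k in keys)
print(f"{moved / len(keys):.0%} of keys moved")  # roughly a quarter, all to node-d
```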

3. Query Languages: How We Talk to Our Data

The language used to interact with a database is a direct reflection of its underlying data model and philosophy.

* SQL (Structured Query Language): The defining feature of relational databases is their use of SQL, a powerful, declarative, and highly standardized language governed by ANSI and ISO standards. Its universality is a major strategic advantage; a developer with SQL skills can be productive across a wide range of RDBMSs like MySQL, PostgreSQL, and Microsoft SQL Server. SQL's syntax is engineered to perform complex operations, most notably JOIN clauses that can efficiently combine data from multiple tables based on their relational links.

* NoSQL (Variable Languages): In the NoSQL universe, there is no single, standardized query language. Each database typically provides its own unique query language or API, which is specifically tailored to interact with its distinct data model in the most efficient way possible.

* MongoDB Query Language (MQL): MongoDB uses a rich query language whose syntax is based on JSON. Queries are constructed as documents, which allows developers to express complex filtering and updating logic in a format that is native to JavaScript and many other modern programming languages. This makes MQL particularly intuitive for developers building web applications.

* Cassandra Query Language (CQL): CQL was intentionally designed with a syntax that is very similar to SQL. This was a strategic choice to lower the barrier to entry for the vast number of developers already familiar with relational databases. However, this similarity is largely superficial. CQL lacks support for critical SQL features like JOINs, subqueries, and complex multi-row transactions, a direct reflection of Cassandra's distributed, denormalization-focused architecture.

* Cypher (for Neo4j): Cypher is a declarative, visual query language purpose-built for graphs. Its most distinctive feature is the use of ASCII-art to create patterns that visually represent the nodes and relationships a user wants to find. For example, (user)-->(friend) is a simple pattern that matches every node a user is connected to by an outgoing relationship, such as their friends. This pattern-matching approach makes Cypher exceptionally expressive and readable for complex, multi-hop relationship queries that would be cumbersome and slow in SQL.

* Redis Commands: Redis, as a key-value store, is not typically queried with a declarative language. Instead, it is manipulated via direct commands sent through a command-line interface or client library. Commands like SET, GET, and HSET (for hash fields) provide direct, high-performance access to its underlying data structures, while FT.SEARCH adds full-text search through the Redis query engine (originally the RediSearch module).
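MQL's central idea, that a query is itself a document, can be shown with a toy matcher. The sketch below is not MongoDB's implementation: it supports only equality and the `$gt`, `$lt`, and `$in` operators on top-level fields, and it ignores MongoDB's rules for arrays, nested paths, and comparisons across types. The `users` data is hypothetical.

```python
# Toy matcher for MQL-style filter documents (equality, $gt, $lt, $in only).
OPERATORS = {
    "$gt": lambda value, arg: value is not None and value > arg,
    "$lt": lambda value, arg: value is not None and value < arg,
    "$in": lambda value, arg: value in arg,
}

def matches(doc, query):
    """Return True if doc satisfies every condition in the query document."""
    for field, condition in query.items():
        value = doc.get(field)
        if isinstance(condition, dict):  # operator expression, e.g. {"$gt": 30}
            if not all(OPERATORS[op](value, arg) for op, arg in condition.items()):
                return False
        elif value != condition:         # a plain value means equality
            return False
    return True

users = [
    {"name": "Alice", "age": 34, "city": "Lisbon"},
    {"name": "Bob", "age": 27, "city": "Porto"},
    {"name": "Carol", "age": 45, "city": "Lisbon"},
]

# The filter is an ordinary data structure, just like the documents it selects.
query = {"city": "Lisbon", "age": {"$gt": 30, "$lt": 40}}
print([u["name"] for u in users if matches(u, query)])  # ['Alice']
```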

To provide a practical, at-a-glance comparison, the following table demonstrates how a conceptually similar task—finding a user and their related orders—is accomplished across these different paradigms. This highlights how the query language is a direct manifestation of the database's core data model.

| Task | SQL (e.g., PostgreSQL) | MongoDB (MQL) | Neo4j (Cypher) | Cassandra (CQL) |
|---|---|---|---|---|
| Find user 'Alice' and her orders | SELECT u.name, o.order_id, o.amount FROM Users u JOIN Orders o ON u.user_id = o.user_id WHERE u.name = 'Alice'; | db.users.findOne({ name: "Alice" }, { name: 1, orders: 1 }); | MATCH (u:User {name: 'Alice'})-->(o:Order) RETURN u.name, o.order_id, o.amount; | SELECT order_id, amount FROM orders_by_user WHERE user_name = 'Alice'; |
| Language Insight | Relational JOIN is central. | Document-oriented find on embedded data. | Graph pattern matching. | Query on a pre-built, denormalized table. |

As the table illustrates, each language is optimized for its model. SQL uses its powerful JOIN to link separate tables. MQL performs a simple find operation, retrieving the orders that are embedded directly within the user document, avoiding a join entirely. Cypher uses its intuitive pattern matching to traverse the relationship between a user and their orders. CQL requires that the data be pre-modeled for this specific query in a denormalized table, demonstrating its query-first design philosophy.
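Both the MongoDB and the Cassandra columns in the table depend on data having been shaped for the query in advance. The sketch below shows the write-side cost using hypothetical in-memory stand-ins: each order is written both into an embedded-document structure and into an `orders_by_user` table keyed by user name, so that either one can answer "Alice's orders" with a single lookup and no join.

```python
# Hypothetical stand-ins for two query-shaped storage layouts.
users_docs = {}      # document model: orders embedded inside each user document
orders_by_user = {}  # query-first table: partition key = user_name

def place_order(user_name, order_id, amount):
    """Write path: every structure that serves a query must be updated at write time."""
    doc = users_docs.setdefault(user_name, {"name": user_name, "orders": []})
    doc["orders"].append({"order_id": order_id, "amount": amount})
    orders_by_user.setdefault(user_name, []).append({"order_id": order_id, "amount": amount})

place_order("Alice", 101, 25.0)
place_order("Alice", 102, 40.0)
place_order("Bob", 103, 15.5)

# Read path: each layout answers "Alice's orders" with one lookup and no join.
print(users_docs["Alice"]["orders"])
print(orders_by_user["Alice"])
```

The price is duplication: every additional query pattern, such as orders by date, would need yet another structure kept in step on every write.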

4. Consistency: The Transactional Divide

This section revisits the ACID and BASE philosophies from a practical, use-case-driven perspective. The choice between them represents a fundamental trade-off between absolute data integrity and high system availability and performance.

* When ACID is Non-Negotiable (SQL): There is a class of applications where data accuracy is not just a feature but a core business and, in some cases, legal requirement. In these scenarios, the strict transactional guarantees of ACID are non-negotiable. Examples include:

* Financial and Banking Systems: Every transaction, from an ATM withdrawal to a stock trade, must be processed with absolute atomicity and durability.

* E-commerce Checkout and Payment Processing: An order must be linked correctly to a payment and an inventory deduction. Inconsistent data here leads directly to lost revenue and customer dissatisfaction.

* Enterprise Resource Planning (ERP) and Customer Relationship Management (CRM) Systems: These systems are the central nervous system of a business, built on well-defined, structured data where consistency across modules is paramount.

* When Availability is King (NoSQL): There is another class of applications, particularly at web scale, where staying available and responsive at all times is more critical than ensuring every piece of data is perfectly consistent across the globe at every instant. For these systems, the BASE model and its eventual consistency are often the superior choice. Examples include:

* Large-Scale Social Media: A user's feed must always load quickly. It is acceptable if a new post or a "like" takes a few moments to propagate through the system.

* Real-Time Analytics and Logging: These systems are designed to ingest massive volumes of data at high velocity. Prioritizing write availability means the system keeps accepting data even when some nodes fail or fall behind, which reduces the risk of dropping events during ingestion.

* Content Management Systems and Product Catalogs: It is more important for a user to always be able to view a product catalog than for a minor price update to be reflected instantly for every user simultaneously.

This distinction is not as rigid as it once was. The lines are blurring as the technologies evolve. Notably, some leading NoSQL databases, including MongoDB, now offer multi-document ACID transactions, providing developers with the ability to enforce strong consistency when needed, even within a flexible, non-relational model. This allows for a more nuanced approach, where developers can choose the level of consistency required on a per-operation basis.

5. The Developer Experience: Trade-offs in Practice

The choice of a database technology has a profound impact on the day-to-day lives of developers, influencing everything from hiring and training to development speed and long-term maintenance.

* Maturity and Community (SQL): Having been the industry standard for nearly half a century, the SQL ecosystem is immensely mature and stable. This translates to several key advantages for development teams: a massive global talent pool of experienced SQL developers, extensive and high-quality documentation, a vast array of battle-tested third-party tools for management and analysis, and vibrant community support forums where nearly every conceivable problem has already been asked and answered. For many organizations, this low-risk, high-support environment is a decisive factor.

* Flexibility and Speed (NoSQL): The dynamic schema of NoSQL databases is frequently cited as a major catalyst for developer productivity and agile development methodologies. The ability to add new fields and evolve the data model on the fly, without performing complex and potentially service-disrupting database migrations, allows development teams to iterate on new features much more quickly. This can significantly reduce the time-to-market for new applications and products.

* The Learning Curve and Standardization: SQL is a single, standardized language. An investment in learning SQL is highly portable across a wide range of different RDBMS products. The NoSQL landscape, by contrast, is fragmented. Each database has its own query language and data modeling paradigm, meaning skills are less transferable. A developer who is an expert in MongoDB's document model and MQL may have a steep learning curve when moving to Neo4j's graph model and Cypher query language.

* Operational Complexity: While a developer can get an application up and running quickly with a single-node NoSQL instance, managing a large, distributed, multi-node NoSQL cluster in production can be significantly more complex than managing a single, vertically-scaled SQL server. Ensuring data consistency, managing replication, planning for node failures, and executing backups in a distributed environment are non-trivial operational challenges that require specialized expertise.

Part III: The Synthesis - NewSQL, The Bridge Between Worlds

For years, the database world was defined by a difficult trade-off: the strong consistency and familiar SQL interface of relational databases versus the massive horizontal scalability and flexibility of NoSQL. This dichotomy forced architects to choose one set of benefits at the expense of the other. However, a new class of database systems, collectively known as NewSQL, has emerged to challenge this compromise, offering a powerful synthesis that combines the best of both worlds.

Defining NewSQL

NewSQL is a class of modern relational database management systems (RDBMS) designed to provide the horizontal scalability and high performance of NoSQL systems while retaining the strict ACID transactional guarantees and standard SQL interface of traditional relational databases. The term, coined by 451 Research analyst Matt Aslett in 2011, describes a category of databases built from the ground up for distributed environments. They are not a simple evolution of legacy SQL systems, nor are they a relational veneer on top of a NoSQL core. They represent a fundamental re-architecting of the relational database to meet the demands of modern, high-throughput, mission-critical applications that cannot sacrifice either scale or consistency.

Architectural Spotlight: How They Do It

NewSQL systems achieve this ambitious goal through a combination of innovative architectural patterns that were not available to the designers of legacy RDBMSs.

* Distributed, Shared-Nothing Architecture: At their core, NewSQL databases are distributed systems. Like their NoSQL counterparts, they are designed to run on clusters of commodity servers. They employ a "shared-nothing" architecture, where each node in the cluster is independent and has its own processor, memory, and disk. Data is partitioned (or sharded) across these nodes, allowing the system to scale horizontally by simply adding more nodes to the cluster.

* Consensus Protocols: Maintaining transactional consistency across a distributed cluster is a major computer science challenge. NewSQL databases address it with consensus algorithms such as Raft or Paxos. The replicas of each data partition use the consensus protocol to agree on the order of writes, and a write counts as committed only after a majority of those replicas have acknowledged it; transactions that span several partitions additionally rely on a distributed commit protocol layered on top. This keeps replicas consistent and preserves ACID properties through node failures, as long as a majority of each partition's replicas remain available.

* In-Memory Processing & Lock-Free Concurrency: To achieve extreme performance, some NewSQL systems, most notably VoltDB, are designed as in-memory databases. By keeping the active dataset in RAM, they eliminate the latency associated with disk I/O. Furthermore, VoltDB utilizes a novel approach to concurrency. It partitions the data and then executes transactions serially (one at a time) within each partition on a single thread. Because there is no concurrent access to data within a partition, this design completely eliminates the need for traditional locking and latching mechanisms, which are a major source of overhead and performance bottlenecks in legacy RDBMSs.

* Hybrid Transactional/Analytical Processing (HTAP): A key innovation in the NewSQL space is the ability to handle both transactional (OLTP) and analytical (OLAP) workloads within a single system. Databases like TiDB achieve this with a hybrid storage architecture. They use a row-based storage engine (TiKV) optimized for fast transactional writes and point reads, and a separate columnar storage engine (TiFlash) optimized for large-scale analytical queries. Data is replicated in real time from TiKV to TiFlash, allowing businesses to run complex analytics on live, up-to-the-second transactional data without significantly impacting the performance of their primary OLTP application.
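The commit rule at the heart of consensus-based replication (an entry counts as committed once a majority of replicas hold it) can be illustrated with a toy model. This is not Raft or Paxos: leader election, terms, log repair, and retries are all omitted, and the `Follower` and `replicate` names are hypothetical.

```python
class Follower:
    """A toy replica that appends entries to its log while it is reachable."""

    def __init__(self, up=True):
        self.up = up
        self.log = []

    def append(self, entry):
        if not self.up:
            return False  # unreachable: no acknowledgement
        self.log.append(entry)
        return True

def replicate(leader_log, followers, entry):
    """Return True once a majority of the cluster (leader included) holds the entry."""
    leader_log.append(entry)
    acks = 1 + sum(f.append(entry) for f in followers)  # the leader counts itself
    cluster_size = 1 + len(followers)
    # A real protocol would keep retrying; here an unacknowledged entry simply stays uncommitted.
    return acks > cluster_size // 2

leader_log = []
followers = [Follower(), Follower(up=False), Follower(), Follower(up=False)]

first = replicate(leader_log, followers, "SET x=1")
print(first)   # True: 3 of 5 replicas hold the entry

followers[2].up = False
second = replicate(leader_log, followers, "SET x=2")
print(second)  # False: only 2 of 5, so the entry is not committed
```

Requiring a majority is what lets the cluster survive minority failures: any two majorities overlap in at least one replica, so a committed entry cannot be missed by a later majority.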

Deep Dive on NewSQL Architectures

To better understand these principles in practice, let's examine the architectures of three prominent NewSQL databases.

* CockroachDB: This database is designed to be a geographically distributed, resilient SQL database that is wire-compatible with PostgreSQL, making it easy to adopt for teams with existing SQL expertise. Its architecture is composed of symmetric nodes, meaning any node can handle read and write requests. Under the hood, CockroachDB is a distributed, transactional key-value store: the SQL layer translates standard SQL queries into key-value operations. Data is partitioned into ranges, and each range is replicated across the cluster. The Raft consensus protocol ensures that all replicas of a range agree on every change, which preserves strong, ACID-compliant consistency through node failures and network partitions, provided a majority of each range's replicas can still communicate.

* TiDB: TiDB is a highly MySQL-compatible NewSQL database that features a decoupled architecture, separating the computing and storage layers. This allows for greater flexibility and independent scaling. It consists of three core components: the TiDB Server, which is a stateless SQL processing layer that handles query parsing and optimization; the TiKV Server, which is the distributed, transactional key-value storage engine where the actual data resides; and the Placement Driver (PD), which acts as the "brain" of the cluster, managing metadata, allocating transaction timestamps, and orchestrating data balancing across the TiKV nodes.

* VoltDB: VoltDB is an in-memory relational database engineered for extreme throughput and low latency on transactional workloads. Its architecture is built on two key principles: sharding and single-threaded execution. Data is partitioned across the CPU cores of every node in the cluster, and transactions are primarily written as Java stored procedures that are compiled ahead of time. Within any given partition, these stored procedures execute serially on a single thread. This lock-free design eliminates the contention and overhead associated with traditional multi-threaded database engines, allowing VoltDB to process millions of transactions per second on a multi-node cluster.
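The idea of a SQL layer on top of a replicated key-value store can be sketched in a few lines. This is a simplified illustration, assuming a tuple key of (table, index, primary key) and hypothetical names (`encode_row`, `RangeMap`, `can_commit`); CockroachDB and TiKV use their own compact byte encodings and far richer range management. It shows two points from the text: rows become entries in one ordered keyspace that is cut into ranges, and a Raft-replicated range can commit a change once a majority of its replicas acknowledge it.

```python
import bisect


def encode_row(table: str, pk: int, row: dict) -> tuple:
    """Hypothetical encoding: the row becomes one entry keyed by (table, index, primary key)."""
    return (table, "primary", pk), row


class RangeMap:
    """An ordered keyspace cut into contiguous ranges; each range is replicated separately."""

    def __init__(self, split_keys: list) -> None:
        # Range 0 holds keys below split_keys[0]; range i holds [split_keys[i-1], split_keys[i]).
        self.split_keys = sorted(split_keys)

    def range_for(self, key: tuple) -> int:
        return bisect.bisect_right(self.split_keys, key)


def majority(replicas: int) -> int:
    # A Raft group commits an entry once a majority of its replicas have stored it.
    return replicas // 2 + 1


def can_commit(acks: int, replicas: int) -> bool:
    return acks >= majority(replicas)


ranges = RangeMap([("orders", "primary", 1000), ("products", "primary", 0)])
key, value = encode_row("orders", 42, {"customer_id": 7, "quantity": 2})
```

With three replicas per range, a majority is two, so a range keeps accepting writes with one replica down; with five replicas it tolerates two failures.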

Use Cases for NewSQL

NewSQL databases are not a universal replacement for all other database types. They are a specialized solution for a specific, and growing, class of applications: those that have hit the vertical scaling limits of a traditional SQL database but whose business requirements absolutely cannot tolerate the weaker consistency models of many NoSQL systems. Prime use cases include:

* High-frequency financial trading and payment processing systems.

* Large-scale e-commerce platforms, particularly for order and inventory management.

* Real-time bidding (RTB) platforms in the AdTech industry.

* Globally distributed IoT platforms that require transactional integrity for device data.

* Massively multiplayer online gaming (MMOG) platforms that manage millions of concurrent users and in-game transactions.

The emergence of NewSQL is a direct market response to the inherent limitations of the preceding database paradigms. For decades, the choice was stark: the ACID guarantees of SQL, which struggled to scale horizontally, or the horizontal scalability of NoSQL, which often came at the cost of strict consistency. Architects were forced to choose one or build incredibly complex, custom hybrid systems to work around these limitations. However, a critical class of modern, global businesses—in finance, e-commerce, gaming, and logistics—found this trade-off unacceptable. Their workloads demanded both massive, distributed scale and the unimpeachable transactional integrity of ACID. A lost payment, an incorrect inventory count, or an inconsistent game state at global scale represents a catastrophic business failure. NewSQL systems were engineered specifically to resolve this tension. By re-imagining the relational database for a distributed, cloud-native world, they provide a solution that was previously unattainable without massive, bespoke engineering efforts. Thus, NewSQL is not merely a technical improvement; it is a business enabler that unlocks the potential for a new generation of globally distributed, resilient, and consistent applications.

Part IV: Databases in the Wild: Applications, Case Studies, and Strategy

Having explored the philosophical and technical underpinnings of SQL, NoSQL, and NewSQL, this final section grounds the analysis in the real world. By examining practical applications, company case studies, and strategic considerations, we can synthesize these concepts into an actionable framework for choosing the right database technology.

When to Bet on SQL: The Realm of Unquestionable Integrity

Despite the rise of alternatives, the traditional relational database remains the default and often the best choice for a vast range of applications, particularly those where data integrity is the highest priority.

* Ideal Use Cases:

* Financial and Banking Systems: The bedrock of the global economy runs on relational databases. Applications for banking, accounting, and financial reporting demand the strict ACID compliance that SQL guarantees to ensure every transaction is processed with perfect accuracy.

* E-commerce Transaction Engines: The core functionality of an e-commerce site (managing customer accounts, processing orders, handling payments, and tracking inventory) relies on consistent, relational data. Transactions and integrity constraints ensure that an order is never recorded without a valid payment and that inventory levels are updated accurately and atomically.

* Enterprise Systems (CRM, ERP): Core business applications like Customer Relationship Management and Enterprise Resource Planning are built around well-defined, highly structured data with complex interrelationships. The relational model is perfectly suited to manage this complexity.

* Companies Using SQL: The list of companies relying on SQL is extensive and includes nearly every major enterprise. Financial giants like Morgan Stanley and JP Morgan Chase use Microsoft SQL Server for their core operations. Even technology leaders who are famous for their use of NoSQL at the edge still rely on SQL for critical backend systems; for example, Netflix uses SQL for its billing and employee data, and Uber and Amazon use it for essential corporate data management.

* Case Study Vignette: A Typical E-commerce Backend

Consider a simplified e-commerce database with three tables: Customers (with customer_id, name, address), Products (with product_id, name, price), and Orders (with order_id, customer_id, product_id, quantity). This relational structure is ideal for answering critical business questions. For instance, to find "all orders for the 'SuperWidget' placed by customers in California," a developer can write a single, declarative SQL query using JOINs to link the three tables. This query is efficient, easy to understand, and leverages the database's core strength in managing relationships. Trying to model this in a non-relational way would introduce unnecessary complexity and data duplication, demonstrating why SQL remains the superior choice for applications built on inherently relational data.
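The vignette's schema and query can be tried with Python's built-in `sqlite3` module, used here as a stand-in for any relational database. Two columns are assumptions not in the original description: `state` on `Customers` (so the California filter does not have to parse the free-text address) and `stock` on `Products` (to illustrate the atomic inventory update from the e-commerce bullet). The sample rows are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when asked
conn.executescript("""
CREATE TABLE Customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    address     TEXT,
    state       TEXT                                 -- assumed column, split out of the address
);
CREATE TABLE Products (
    product_id INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    price      REAL NOT NULL,
    stock      INTEGER NOT NULL CHECK (stock >= 0)   -- assumed inventory column
);
CREATE TABLE Orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES Customers(customer_id),
    product_id  INTEGER NOT NULL REFERENCES Products(product_id),
    quantity    INTEGER NOT NULL CHECK (quantity > 0)
);
""")

with conn:
    conn.executemany("INSERT INTO Customers VALUES (?, ?, ?, ?)", [
        (1, "Ana", "12 Oak St, San Jose", "CA"),
        (2, "Ben", "9 Pine Ave, Austin", "TX"),
    ])
    conn.execute("INSERT INTO Products VALUES (1, 'SuperWidget', 19.99, 10)")


def place_order(customer_id: int, product_id: int, quantity: int) -> None:
    # `with conn` commits if the block succeeds and rolls back if it raises, so the
    # order row and the stock decrement are applied together or not at all.
    with conn:
        conn.execute(
            "INSERT INTO Orders (customer_id, product_id, quantity) VALUES (?, ?, ?)",
            (customer_id, product_id, quantity),
        )
        conn.execute(
            "UPDATE Products SET stock = stock - ? WHERE product_id = ?",
            (quantity, product_id),
        )


# "All orders for the 'SuperWidget' placed by customers in California."
CA_SUPERWIDGET_ORDERS = """
SELECT o.order_id, c.name, o.quantity
FROM Orders AS o
JOIN Customers AS c ON c.customer_id = o.customer_id
JOIN Products  AS p ON p.product_id  = o.product_id
WHERE p.name = 'SuperWidget' AND c.state = 'CA'
ORDER BY o.order_id
"""

place_order(1, 1, 3)   # Ana (CA)
place_order(2, 1, 2)   # Ben (TX)
try:
    place_order(1, 1, 50)  # stock would go negative: CHECK fails, the INSERT is undone too
except sqlite3.IntegrityError:
    pass

rows = conn.execute(CA_SUPERWIDGET_ORDERS).fetchall()
```

The failed third order shows the integrity argument in action: the `CHECK (stock >= 0)` constraint rejects the stock update, and the surrounding transaction also undoes the order row that had already been inserted.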

When to Embrace NoSQL: The Frontier of Scale and Flexibility

NoSQL databases are the trailblazers for applications operating at the frontier of modern data challenges, where massive scale, extreme velocity, and data model flexibility are the primary concerns.

* Ideal Use Cases:

* Big Data & Real-Time Analytics: NoSQL systems are purpose-built to ingest, store, and process massive, high-velocity data streams from sources like clickstreams, ad impressions, and social media feeds.

* Content Management & Mobile Applications: The flexible schema of document databases is ideal for managing diverse, user-generated content like blog posts, images, and videos. It allows for rapid feature development in mobile apps where requirements evolve quickly.

* Internet of Things (IoT): Wide-column stores like Cassandra excel at handling the relentless firehose of time-series data generated by billions of connected sensors and devices.

* Recommendation Engines & Personalization: Graph databases are a natural fit for modeling complex relationships to provide real-time, personalized recommendations. They can efficiently answer queries like "users who bought this also bought..." by traversing the graph of user and product interactions.

* Companies Using NoSQL: Many of the world's largest digital platforms are built on NoSQL. Netflix famously uses a combination of SimpleDB, HBase, and Cassandra to ensure high availability and scalability for its massive streaming service. Uber leverages Riak's distributed key-value model to power its real-time ride-matching system. Forbes migrated its website to MongoDB Atlas to achieve faster development cycles and lower ownership costs. Amazon uses its own DynamoDB, a key-value and document database, to power its massive e-commerce product catalog, a use case that demands high availability and low latency.

* Case Study Vignette: Recommendation Engine with a Graph Database

Let's model a simple recommendation system using a graph structure. We can define nodes for (:User), (:Product), and (:Category). The relationships between them are represented by directed edges, such as (User)-->(Product) for a purchase and (Product)-->(Category) for category membership. With a graph database like Neo4j, answering a complex recommendation query is remarkably straightforward. To find "products frequently bought by other users who also bought the same products as User A," a developer can write a concise Cypher query that traverses these relationships. The query would start at the node for User A, find the products they bought, find other users who also bought those products, and then find what other products those users bought. This type of multi-hop, relationship-heavy query is exactly what graph databases are optimized for; in a traditional SQL database it would require multiple self-joins over a purchases table, which become slow and expensive as the data grows.
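The three hops described above can be sketched without a database at all. In this hypothetical example, plain Python dictionaries stand in for the graph (this is not Neo4j's API, and the sample users and products are invented). `recommend` walks from a user to their products, from those products to other buyers, and from those buyers to products the user does not yet own, scoring each candidate by how many such paths reach it.

```python
from collections import Counter

# Hypothetical stand-in for a graph database: each User node maps to the set of
# Product nodes it has a "bought" edge to. This is not Neo4j's API.
bought = {
    "A": {"widget", "gizmo"},
    "B": {"widget", "doohickey"},
    "C": {"gizmo", "doohickey", "sprocket"},
    "D": {"sprocket"},
}


def buyers_index(graph: dict) -> dict:
    """Reverse the edges (product -> buyers) so traversal can walk both directions."""
    buyers: dict = {}
    for user, products in graph.items():
        for product in products:
            buyers.setdefault(product, set()).add(user)
    return buyers


def recommend(user: str, graph: dict, top_n: int = 3) -> list:
    buyers = buyers_index(graph)
    mine = graph.get(user, set())
    scores: Counter = Counter()
    for product in mine:                            # hop 1: what the user bought
        for other in buyers[product] - {user}:      # hop 2: who else bought it
            for candidate in graph[other] - mine:   # hop 3: what else they bought
                scores[candidate] += 1
    return scores.most_common(top_n)
```

For user A, `recommend("A", bought)` ranks "doohickey" first (reached through both B and C) ahead of "sprocket" (reached through C only). A graph database stores relationships so they can be followed in either direction, which is what `buyers_index` has to simulate here.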

Polyglot Persistence: The Hybrid Reality

The most sophisticated and effective modern architectures rarely make an "either/or" choice. Instead, they practice polyglot persistence, a strategy of using multiple database technologies within a single application, leveraging the unique strengths of each for different tasks. This pragmatic approach acknowledges that no single database can be the best at everything.

* Case Study Deep Dive: How Reddit Works

Reddit's architecture is a quintessential example of polyglot persistence in action. The platform must handle both mission-critical, structured data and a massive volume of high-velocity, ephemeral data. It solves this by using a hybrid model:

* The SQL Core (System of Record): The foundational data of the platform—user accounts, subreddit information, posts, and comments—is stored in a robust relational database (PostgreSQL). This data is highly relational and requires strong integrity guarantees. Using SQL ensures that this core data is consistent, reliable, and can be queried in complex ways to support the site's features.

* The NoSQL Edge (High-Velocity Data): The enormous volume of less-critical, high-throughput data is offloaded to specialized NoSQL systems. User votes (upvotes/downvotes) are handled by Apache Cassandra, a wide-column store designed for massive write scalability. It is acceptable if a vote is occasionally lost or delayed (violating strict ACID properties), but it is unacceptable to lose a user's post. Caching for frequently accessed data is handled by Redis, an in-memory key-value store that provides lightning-fast reads. This strategy offloads immense read and write traffic from the primary PostgreSQL database, allowing it to perform optimally on the tasks for which it is best suited.

This hybrid model demonstrates a mature architectural philosophy: use the right tool for the job. SQL is used for the durable system of record, while various NoSQL databases are used for specialized, high-velocity tasks where their unique performance characteristics provide a decisive advantage.
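The division of labor in the Reddit example can be sketched as a cache-aside read path plus a separate counter store. This is a hypothetical illustration, not Reddit's code: in-memory dictionaries stand in for PostgreSQL (the system of record), Redis (a cache with a time-to-live), and Cassandra (vote counters), and the class and method names are invented.

```python
import time
from collections import Counter


class PolyglotStore:
    """Hypothetical sketch: dictionaries stand in for PostgreSQL, Redis, and Cassandra."""

    def __init__(self, cache_ttl_seconds: float = 60.0) -> None:
        self.system_of_record = {}   # stand-in for PostgreSQL: post_id -> post
        self.cache = {}              # stand-in for Redis: post_id -> (expires_at, post)
        self.votes = Counter()       # stand-in for Cassandra: post_id -> net score
        self.ttl = cache_ttl_seconds
        self.db_reads = 0            # how often reads reach the system of record

    def create_post(self, post_id: int, title: str) -> None:
        # Durable, relational data is written to the system of record.
        self.system_of_record[post_id] = {"id": post_id, "title": title}

    def get_post(self, post_id: int) -> dict:
        # Cache-aside: serve a fresh cached copy if there is one; otherwise read
        # the system of record and repopulate the cache.
        now = time.monotonic()
        entry = self.cache.get(post_id)
        if entry is not None and entry[0] > now:
            return entry[1]
        self.db_reads += 1
        post = self.system_of_record[post_id]
        self.cache[post_id] = (now + self.ttl, post)
        return post

    def vote(self, post_id: int, delta: int) -> None:
        # High-volume, loss-tolerant writes go to a separate store, not the system of record.
        self.votes[post_id] += delta


store = PolyglotStore()
store.create_post(1, "Hello, Reddit")
for _ in range(1000):
    store.get_post(1)
for delta in (+1, +1, -1):
    store.vote(1, delta)
```

After a thousand reads the system of record has been queried once, and votes never touch it. A production cache also needs invalidation when a post is edited, since a TTL alone allows stale reads until the entry expires.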

The decision to migrate from a monolithic SQL architecture to a NoSQL or hybrid system is one of the most significant undertakings in software engineering. It is not a simple "lift-and-shift" of data but a fundamental re-architecting of the application itself. A common reason for migration failure is not a flaw in the technology, but a failure to appreciate this distinction. As illustrated in a case study of a social media platform migrating to Amazon DynamoDB, the process requires a complete redesign of the data model around the application's specific access patterns. You do not simply move tables; you must denormalize data and create new structures optimized for NoSQL's query capabilities.

This necessity is widely echoed among experienced developers. A team cannot simply replace a SQL database with a NoSQL one and expect the application to work, because core relational features like JOINs and database-level constraints are lost. The application logic must be rewritten to handle these tasks. For example, Cassandra's data modeling approach is explicitly query-driven ("request-based"): developers design their tables around the specific queries they will run, often creating multiple denormalized tables that duplicate data to serve different query patterns. Therefore, a successful migration hinges on deep, upfront collaboration between database and application teams and a willingness to rethink, from the ground up, how the application interacts with its data.
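Query-driven modeling can be illustrated with two hypothetical "tables", each keyed by the value its query filters on (the role a partition key plays in Cassandra). The names `posts_by_user`, `posts_by_tag`, and `write_post` are invented for this sketch, and dictionaries stand in for the database. The point is that every read is a single-key lookup, while every write must update each copy itself.

```python
from collections import defaultdict

# Hypothetical query-driven model: one "table" per query, keyed by the value that
# query filters on. The same post is stored more than once on purpose.
posts_by_user = defaultdict(list)   # serves "all posts by a given author"
posts_by_tag = defaultdict(list)    # serves "all posts with a given tag"


def write_post(post_id: int, author: str, tags: list, body: str) -> None:
    post = {"post_id": post_id, "author": author, "tags": list(tags), "body": body}
    # The application writes every copy itself; the database will not do it.
    posts_by_user[author].append(dict(post))
    for tag in tags:
        posts_by_tag[tag].append(dict(post))


def posts_for_user(author: str) -> list:
    return posts_by_user.get(author, [])   # one lookup, no JOIN


def posts_for_tag(tag: str) -> list:
    return posts_by_tag.get(tag, [])


write_post(1, "ana", ["sql", "nosql"], "Comparing data models")
write_post(2, "ben", ["nosql"], "Designing tables around queries")
```

Because there is no JOIN or foreign key to fall back on, keeping the copies consistent (for example, when a post is edited or deleted) becomes the application's responsibility, which is exactly the rewrite effort the migration discussion warns about.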

Conclusion: A Strategic Framework for Your Next Project

The database landscape has evolved from a monarchy ruled by SQL into a vibrant, cooperative ecosystem of specialized tools. The most resilient, scalable, and powerful modern applications are not built on technological dogma but on a pragmatic understanding of the trade-offs inherent in each choice. The future is hybrid, where SQL, NoSQL, and NewSQL systems work in concert, each playing to its strengths.

Recap of Core Trade-offs

The decision between these database paradigms boils down to a series of fundamental trade-offs:

* Structure vs. Flexibility: SQL's predefined schema enforces data integrity at the cost of rigidity. NoSQL's dynamic schema offers development speed and flexibility at the cost of pushing data validation to the application layer.

* Vertical vs. Horizontal Scalability: SQL traditionally scales up on a single server, which is simple but has cost and physical limits. NoSQL is designed to scale out across clusters of commodity servers, offering a far higher and more cost-effective scaling ceiling.

* Consistency vs. Availability: SQL's ACID guarantees provide strong, immediate consistency, which is critical for transactional systems. NoSQL's BASE model often prioritizes high availability, accepting eventual consistency, which is suitable for many web-scale applications.
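The consistency-versus-availability trade-off can be made concrete with Dynamo-style quorum replication, an approach that Cassandra and similar systems expose as tunable consistency. In this simplified sketch (hypothetical function names, no networking), each key has N = 3 replicas, a write is acknowledged after W replicas store it, and a read consults R replicas. The reader is deliberately given the worst-case replicas to show when a stale value can come back.

```python
N = 3  # replicas per key


def make_replicas() -> list:
    # Each replica holds (version, value); every replica starts at version 0.
    return [(0, "v0") for _ in range(N)]


def write(replicas: list, value: str, w: int) -> None:
    # The write is acknowledged once W replicas store it. Here it reaches
    # replicas 0..W-1 and the remaining replicas stay stale for now.
    version = max(v for v, _ in replicas) + 1
    for i in range(w):
        replicas[i] = (version, value)


def read(replicas: list, r: int) -> str:
    # Worst case for the reader: it contacts the last R replicas, which are the
    # least likely to have received the write, and returns the newest version seen.
    contacted = replicas[N - r:]
    return max(contacted)[1]


strong = make_replicas()
write(strong, "v1", w=2)
strong_read = read(strong, r=2)   # R + W = 4 > N

weak = make_replicas()
write(weak, "v1", w=1)
weak_read = read(weak, r=1)       # R + W = 2 <= N
```

With R + W > N the read set must overlap the write set, so the latest acknowledged write is always seen. With R = W = 1 both operations succeed even when two replicas are unreachable, which improves availability, but a read can return an older value until the replicas converge.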

A Decision-Making Checklist

To guide architects, developers, and technology leaders in making the right choice for their next project, the following checklist provides a strategic framework. Answering these questions will illuminate the most appropriate path forward.

* What is the nature of your data structure?

* Is your data highly structured, with clear, stable relationships that fit neatly into tables (e.g., customers, orders, products)? If so, SQL is the natural starting point.

* Is your data unstructured, semi-structured, or rapidly evolving with no predictable schema (e.g., user-generated content, IoT logs, JSON APIs)? If so, NoSQL is a strong contender.

* What are your consistency requirements?

* Does your application involve financial transactions or other operations where data must be 100% accurate and consistent at all times? Is ACID compliance a business necessity? If yes, lean towards SQL or NewSQL.

* Can your application tolerate momentary inconsistencies? Is high availability and partition tolerance more critical than immediate, strong consistency (e.g., a social media feed)? If yes, a NoSQL database designed for eventual consistency is likely suitable.

* What are your scalability and performance needs?

* What is your expected user load, data volume, and transaction throughput? Will your application need to scale beyond the capacity of a single, powerful server? If you anticipate massive scale and require horizontal, distributed scaling, NoSQL or NewSQL are the primary candidates.

* Is your workload primarily read-heavy or write-heavy? Different databases are optimized for different patterns. For example, a key-value store like Redis excels at simple reads, while a wide-column store like Cassandra is built for high-velocity writes.

* What are your application's primary query patterns?

* Will your most frequent and critical queries require combining data from multiple entities using complex JOINs and aggregations? If so, SQL's relational query engine is purpose-built for this.

* Are your queries primarily simple key-based lookups, traversals of highly connected data (a graph), or full-text searches on documents? If so, a specialized NoSQL database (Key-Value, Graph, or Document, respectively) will likely offer superior performance.

* What are the constraints and skills of your development team and ecosystem?

* What is the existing database expertise within your team? The vast talent pool and mature tooling for SQL can reduce risk and training overhead.

* How important is speed of iteration? The schema flexibility of NoSQL can accelerate agile development cycles.

* Do you have the operational expertise to manage a complex, distributed system, or would the simplicity of a managed, single-node SQL database (or a fully managed NewSQL/NoSQL service) be more appropriate?

By thoughtfully considering these five dimensions—Data Structure, Consistency, Scale, Query Patterns, and Team/Ecosystem—organizations can move beyond the simplistic "SQL vs. NoSQL" debate and make strategic, data-driven decisions that will serve as a solid foundation for their applications, both today and in the future.

Abhishek Nazarkar

University: Sri Balaji University, Pune

School: School of Computer Studies

Course: BCA (Bachelor of Computer Applications)

Interests: NoSQL, MongoDB, and related technologies
