Text Indexing and Search

1) Introduction to the Topic

In the digital age, where data is growing at an exponential rate, finding relevant information quickly has become crucial. Whether you're searching through thousands of documents, browsing websites, or querying a database, text indexing and search play a vital role. Indexing is the backbone of web search engines like Google, and it is central to search tools such as Apache Lucene (a search library), Elasticsearch (a search engine built on Lucene), and MongoDB (which supports text indexes).

___________________________________________________________________________________

2) Explanation

Text indexing is the process of converting raw text into a structured format that allows for fast and accurate retrieval. Instead of scanning every document during a search, the system uses the index to locate relevant documents quickly.
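To make the difference concrete, here is a minimal pure-Python sketch. The documents and the helper names (`linear_scan`, `build_inverted_index`, `indexed_lookup`, `and_query`) are illustrative, not from any library, and tokenization is assumed to be a naive lowercase-and-split. The scan re-reads every document on every query, while the index is built once and then answers each term with a single dictionary lookup. A multi-word AND query becomes a set intersection.

```python
from collections import defaultdict

# Tiny illustrative collection: document ID -> text
docs = {
    1: "This is the first example of text indexing",
    2: "Whoosh is a fast pure Python search engine library",
    3: "A search engine reads the index instead of every document",
}

def tokenize(text):
    # Naive tokenizer: lowercase, then split on whitespace
    return text.lower().split()

def linear_scan(term):
    # No index: every document is re-tokenized and checked on every query
    term = term.lower()
    return {doc_id for doc_id, text in docs.items() if term in tokenize(text)}

def build_inverted_index(documents):
    # Built once, up front: each term maps to the set of IDs of documents containing it
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index

index = build_inverted_index(docs)

def indexed_lookup(term):
    # With an index: one dictionary lookup per term (.get avoids adding missing keys)
    return index.get(term.lower(), set())

def and_query(*terms):
    # Multi-term AND query: intersect the posting sets of all terms
    postings = [indexed_lookup(term) for term in terms]
    return set.intersection(*postings) if postings else set()

print(linear_scan("search"))         # {2, 3}
print(indexed_lookup("search"))      # {2, 3}
print(and_query("index", "engine"))  # {3}
```

Both approaches return the same documents; the difference is that the scan's cost grows with the size of the collection on every query, while the index pays that cost once at build time.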

Key concepts in text indexing:

- Tokenization: Splitting the text into individual words or terms (called tokens).

- Normalization: Converting tokens to a consistent form, for example by lowercasing them, stripping punctuation, and removing stop words (like "and", "the").

- Inverted Index: A mapping from each term to the documents that contain it.

- Ranking Algorithms: Scoring functions such as TF-IDF or BM25 that order the results by relevance.
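The concepts above can be tied together in a short sketch: a tokenizer that normalizes text, an inverted index that also records how often each term appears in each document, and plain TF-IDF ranking. This is a simplified illustration under stated assumptions: the stop-word list is a tiny hypothetical one, idf is taken as log(N / df), and BM25 (which adds term-frequency saturation and document-length normalization on top of the TF-IDF idea) is not implemented here.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical, deliberately tiny stop-word list (real systems use larger ones)
STOP_WORDS = {"a", "an", "and", "is", "of", "the", "this", "to"}

def tokenize(text):
    # Tokenization + normalization: lowercase, keep runs of letters/digits
    # (which strips punctuation), then drop stop words
    return [tok for tok in re.findall(r"[a-z0-9]+", text.lower()) if tok not in STOP_WORDS]

def build_index(docs):
    # Inverted index with term frequencies: term -> {doc_id: count in that doc}
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for term, count in Counter(tokenize(text)).items():
            index[term][doc_id] = count
    return index

def tf_idf_search(query, docs, index):
    # score(doc) = sum over query terms of tf(term, doc) * log(N / df(term))
    n_docs = len(docs)
    scores = defaultdict(float)
    for term in tokenize(query):
        postings = index.get(term, {})
        if not postings:
            continue  # term appears in no document
        idf = math.log(n_docs / len(postings))
        for doc_id, tf in postings.items():
            scores[doc_id] += tf * idf
    # Highest score first; ties broken by document ID so the order is stable
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))

docs = {
    1: "Text indexing makes text search fast.",
    2: "A search engine ranks documents by relevance.",
    3: "Python is a popular language.",
}
index = build_index(docs)
print(tf_idf_search("text search", docs, index))  # doc 1 ranks above doc 2
```

Document 1 comes out on top because it contains the rare term "text" twice, while document 2 only matches "search", which appears in two of the three documents and therefore carries a lower idf.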

___________________________________________________________________________________

3) Procedure

Let's build a simple text indexing and search system using Python and the Whoosh library.

Requirements: pip install whoosh

___________________________________________________________________________________

Steps:

1. Create a schema that defines the fields of each document.

2. Index the documents into a searchable format.

3. Search the index using keywords.

___________________________________________________________________________________

Code Example:

import os

from whoosh.fields import Schema, TEXT
from whoosh.index import create_in, open_dir
from whoosh.qparser import QueryParser

# Step 1: Define the schema; stored=True keeps each field's text so results can display it
schema = Schema(title=TEXT(stored=True), content=TEXT(stored=True))

# Step 2: Create the index directory if it does not exist
if not os.path.exists("indexdir"):
    os.mkdir("indexdir")

# Step 3: Create a new index in the directory (replacing any existing one) and open a writer
ix = create_in("indexdir", schema)
writer = ix.writer()

# Step 4: Add documents, then commit so they become searchable
writer.add_document(title="Hello World", content="This is the first example of text indexing.")
writer.add_document(title="Whoosh Library", content="Whoosh is a fast and featureful pure Python search engine library.")
writer.commit()

# Step 5: Reopen the index and search the "content" field (query terms are ANDed by default)
ix = open_dir("indexdir")
with ix.searcher() as searcher:
    query = QueryParser("content", ix.schema).parse("search engine")
    results = searcher.search(query)
    for result in results:
        print(result["title"], "-", result["content"])

___________________________________________________________________________________

4) Screenshot:

_________________________________________________________________________________

5) Future Scope

Text indexing and search systems are useful across many domains:

- Search Engines: Powering Google, Bing, and others.

- Library & Academic Systems: Organizing and searching through massive digital libraries.

- Chatbots and NLP Apps: Quickly retrieving relevant answers or passages from a collection of documents.

- Enterprise Knowledge Bases: Employees can quickly find relevant documents.

- Mobile Apps: Offline or local search in notes, documents, and chat histories.

With AI and machine learning, search systems are increasingly combining keyword indexes with semantic search, which matches on the meaning and intent behind a query rather than only its exact keywords, to deliver more accurate and personalized results.

💬 "I enjoy exploring how technology works behind the scenes and aim to build a career in protecting digital systems while learning something new every day."

___________________________________________________________________________________

Tanmay Deshmukh

University: Shree Balaji University, Pune

School: School of Computer Studies

Course: BCA (Bachelor of Computer Applications)

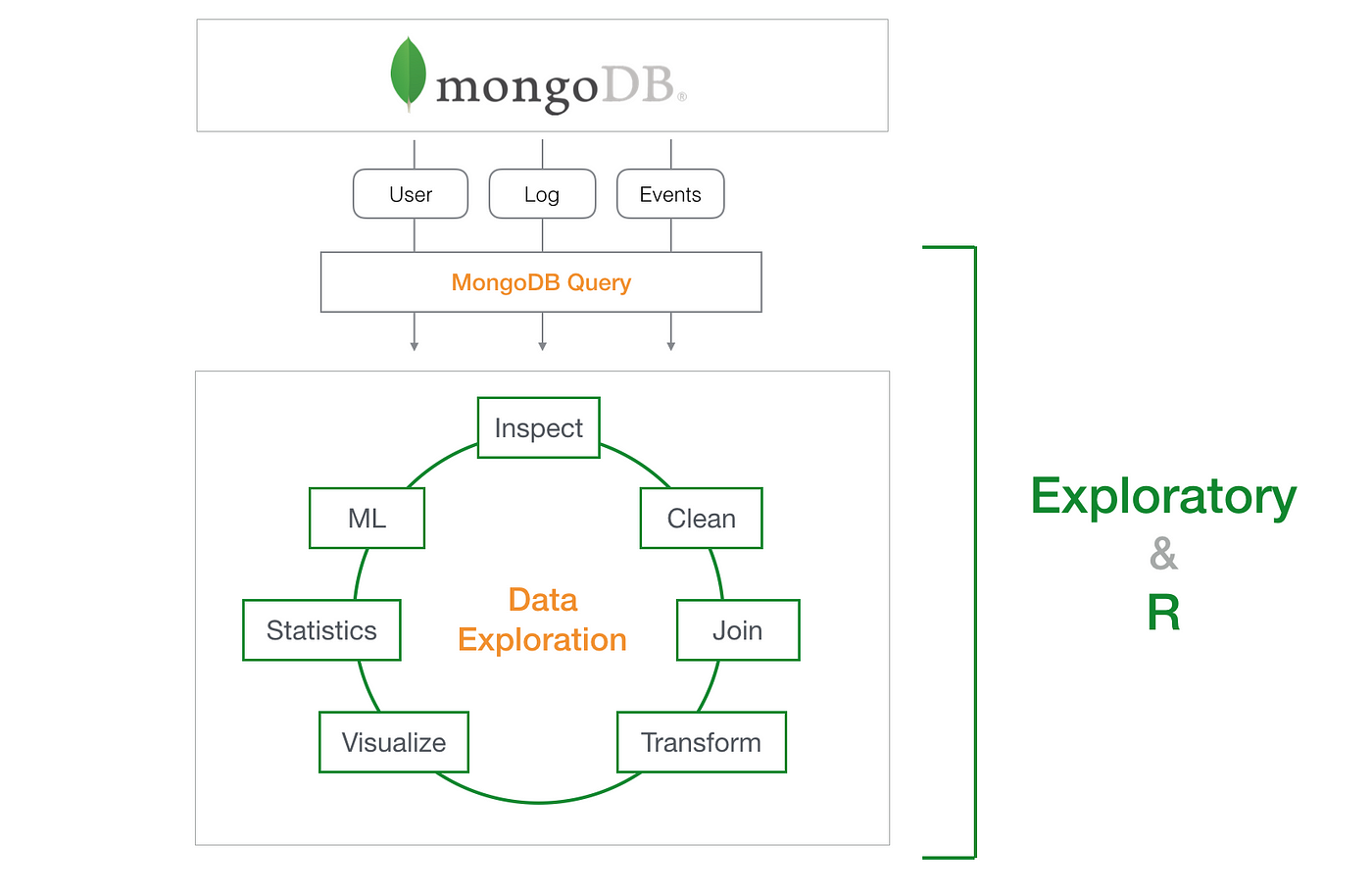

Interests: NoSQL, MongoDB, and related technologies
